When Black Death Goes Viral: How Algorithms of Oppression (Re)Produce Racism and Racial Trauma

By Dr. Tanksley

When George Floyd was murdered by police in 2020, his 9-minute death video was viewed over 1.4 billion times online. Likewise, the live stream of Philando Castile being shot by police accumulated over 2.4 million views in just 24 hours. After Sandra Bland was found dead following a minor traffic violation, bodycam footage of her horrific police encounter garnered hundreds of thousands of views in a few short days.

Concerned about how seeing images of Black people dead and dying would affect young social media users, I conducted a study to understand how digitally mediated traumas were impacting Black girls’ mental and emotional wellness. As I completed interviews with nearly 20 Black girls (ages 18-24) across the US and Canada, my initial fears were quickly confirmed: Black girls reported unprecedented levels of anxiety, depression, fear and chronic stress from encountering Black death online. The words participants used most often were “traumatizing,” “exhausting” and “PTSD.” Many of the girls endured mental, emotional and physiological effects, including insomnia, migraines, nausea, prolonged “numbness” and dissociation. According to Natasha, “seeing Black people get killed by police, that does something to you psychologically.” Similarly, Evenlyn said, “When people die or when Black males are shot by the police and people post the video, that’s traumatizing. Last summer…everybody would post everything, and I was just sad and exhausted.” Danielle summarizes the collective trauma, noting, “we all have PTSD…because of social media, because of all this constant coverage…emotionally I can say it has taken a toll on me. It’s taken a toll on all of us.” Mental health concerns directly affected schooling experiences, with many girls too overwhelmed, triggered or physically exhausted to fully engage in academics following high-profile police killings.

Though viral death videos were amongst the most salient causes of digitally mediated trauma, they weren’t the only source of race-related stress for Black girls online. The girls in my study navigated a multifaceted web of anti-Black racism that posed constant threats to their physical safety and mental wellness. After posting about white supremacy and anti-Blackness, Danielle described having “hundreds of thousands [of] people in my DM’s calling me a lot of names I had never heard before in my life…When these messages keep coming in and people keep calling you [racial slurs] for pointing out a fact… That just takes a toll on you.”

Although Danielle reported the digital attacks through Twitter’s automated content moderation program, her reports were systematically denied or ignored, so her only remaining method of protecting herself was to simply “avoid social media for a while.” The same was true for other participants, whose posts about racialized grief, grassroots organizing and the need to dismantle white supremacy following high-profile killings were flagged as “hate speech” and “inciting violence” by the same algorithms that determined anti-Black slurs and death threats were protected expressions of free speech.

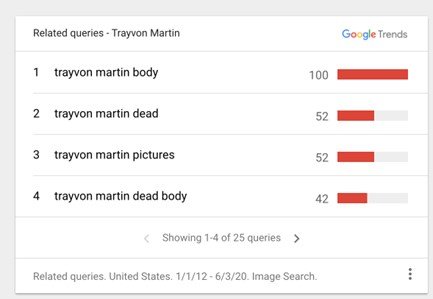

When algorithms designed to protect against racism and hate speech identify calls to end anti-Black violence as a threat to community safety, but select videos of Black people dead and dying to be hyper-circulated without censorship or content warnings, it reveals how technologies replicate the racial logics that produce, fetishize and profit from Black death and dying. Machine learning algorithms ensure that the most grotesque, racially violent and “click-worthy” content is competitively priced and easily found via keyword auctions and content monetization programs. According to Google Trends, the state-sanctioned killing of Black Americans is amongst the most popular searches in Google’s history. Whether it’s George Floyd, Philando Castile, or Eric Garner, the most popular keyword searches for victims of police brutality are always the same: “death video,” “chokehold,” “shooting video,” “dead body” [Image 1.1 & 1.2]. When images of Black people being killed by police garner over 2.4 million clicks in 24 hours, and the average “cost per click” for related content reaches $6 [Image 1.3], the virality of Black death is not only incentivized, but nearly guaranteed.

Image 1.1 - Top Search Queries for “Trayvon Martin”

Content moderation systems reify racially violent necro-politics when “protected categories” based on race, sex, gender, religion, ethnicity, sexual orientation, and serious disability/disease fail to protect subsets of these categories. According to ProPublica’s study of Facebook [Image 1.4], “White men are considered a [protected] group because both traits are protected, while female drivers and black children, like radicalized Muslims, are subsets, because one of their characteristics is not protected.” By these rules, a post admonishing white men for murdering Black people would immediately be flagged as hate speech, while raced and gendered slurs against Black youth would be upheld as “legitimate political expression.” In my study, Black girls’ posts were silenced not because they threatened the community, but because they threatened the white supremacist patriarchy. Meanwhile, Black death goes viral not because it will bring about racial justice or an end to police brutality, but because Black death is Big Business.

Article Details

Race, Education and #BlackLivesMatter: How Online Transformational Resistance Shapes the Offline Experiences of Black College-Age Women

Tiera Tanksley

First Published May 11, 2022

DOI: 10.1177/00420859221092970

Urban Education

About the Author